First Open Science Retreat: On the Future of Research Evaluation

The ZBW's Open Science Retreat invites various stakeholders from all over the world to engage in a dialogue on openness, transparency, and science communication in the digital age. The first retreat started with the topic "Research Evaluation - Promoting the Open Science movement". We summarise the discussions and describe the visions that have been developed by the participants.

by Anna Maria Höfler, Isabella Peters, Guido Scherp, Doreen Siegfried and Klaus Tochtermann

Open Science is known to cover a broad range of topics. The implementation of open practices is quite complex and requires involving various stakeholders, including research performing organisations, libraries and research infrastructures, publishers and service providers, and policy makers. Among other things, regular exchange and networking within and between these groups are necessary to accompany and support efforts towards more openness – ultimately, transforming them into a joint and global movement.

That is why the ZBW – Leibniz Information Centre for Economics has now launched the international “Open Science Retreat” as a new online exchange and networking format to discuss current and global challenges in the implementation of Open Science and the shared vision of an Open Science ecosystem. The format aims especially at the stakeholder groups mentioned above.

Within two compact sessions over two consecutive days, around 30 international experts from the different stakeholder groups are given the opportunity to dive deeper into a specific topic, to share their own expertise and experience, and to record their thoughts on it. The first Open Science Retreat took place at the end of October 2021 and focused on the topic “Research Evaluation – Promoting the Open Science movement”.

Four provocations on the evaluation of Open Science

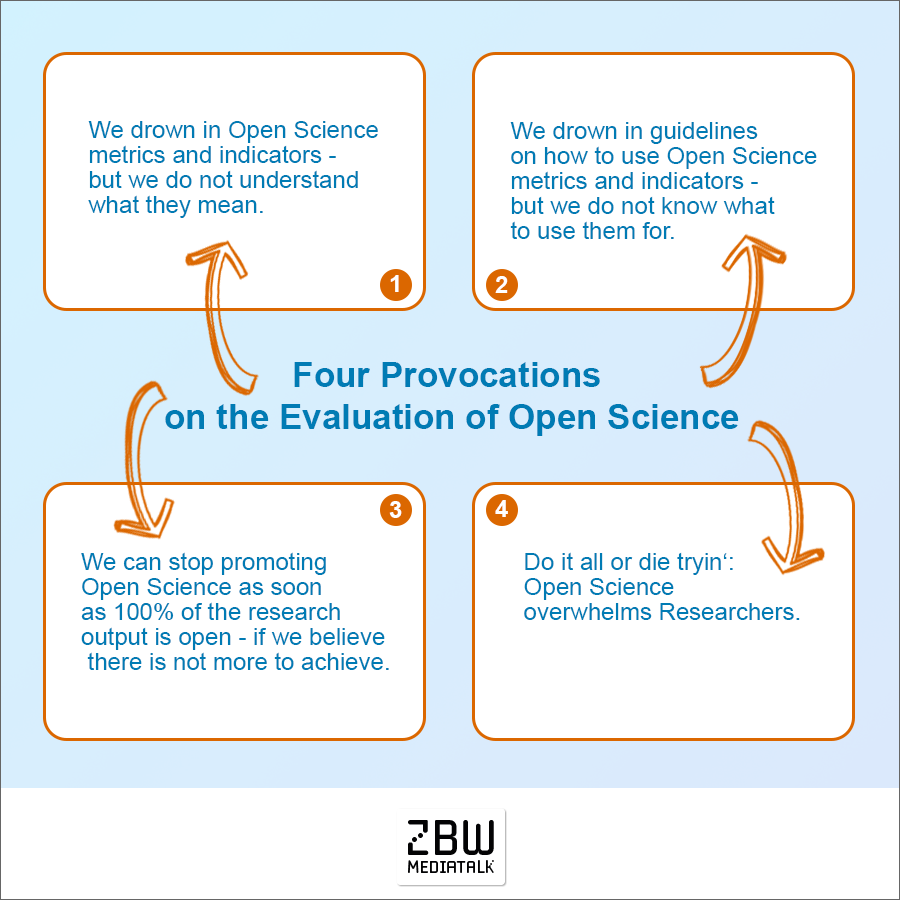

To start off the discussion, Isabella Peters (ZBW – Leibniz Information Centre for Economics) reflected in an impulse talk on the extent to which open practices are currently recognised in research evaluation. To this end, she formulated four provocations:

- We drown in Open Science metrics and indicators – but we do not understand what they mean. There is a plethora of approaches and recommendations on how to evaluate Open Science and on which indicators might be suitable for assessing Open Science practices, for evaluating Open Science careers, and for monitoring progress towards a large amount of openly available research products. There are even automatic Open Science assessment tools on the market already. This well-equipped suite of Open Science indicators and metrics can be used to provide quantitative summaries of the research landscape and of Open Science. However, there is a clear lack of contextualisation of these metrics and a lack of understanding of what the indicators indicate and what they really mean for the research community.

- We drown in guidelines on how to use Open Science metrics and indicators – but we do not know what to use them for. There is a whole bouquet of guidelines and recommendations on how to put Open Science indicators into practice. All of them discuss under which circumstances metrics and indicators are useful tools and when they are able to provide reasonable insights. And all of them list the circumstances under which indicators are not the right tools, for example, when they are not used responsibly. The key to responsible use is aligning the goals and the context of the evaluation with suitable indicators. When it comes to Open Science, or to promoting Open Science, often neither the goals nor the alignment strategy is clear.

- We can stop promoting Open Science as soon as 100% of the research output is open – if we believe there is nothing more to achieve. If we understand Open Science as research outputs made openly available, then with the right incentives, e.g. funding, the Open Science journey may end sooner than thought. However, openness is often associated with further features, e.g. the reproducibility or the credibility of science, which are believed to be increased through Open Science practices. These goals of Open Science are not yet addressed by most of the proposed Open Science indicators. A community-driven discussion of what goals should actually be achieved with Open Science is lacking.

- Do it all or die tryin’: Open Science overwhelms researchers. Sometimes it seems that there is only all or nothing in Open Science – at least, only ALL is rewarded, and everyone else has to justify themselves. Who is a good open scientist anyway, and who is an Open Science champion? Is it only the researcher who reaches 100% or high scores in all Open Science indicators that have been proposed? Propagating the all-or-nothing principle might be counterproductive. Researchers do not start doing Open Science at all, because doing everything and targeting 100% in every indicator is neither practical nor possible for them.

Open Science and research evaluation: what have we (not) learned?

Taking up this impulse, the participants were divided into two breakout groups on the first day to reflect on the last ten years of Open Science and to discuss the question: What do we already know about Open Science and research evaluation and what do we not know?

One result of the discussion was that, even though Open Science has gained attention and its general values with respect to research integrity and good scientific practice are largely accepted, the term and its implementation still seem unclear to many (with the risk of ‘open washing’). A coherent definition and understanding of what Open Science is are still lacking. What are the goals and expectations and, consequently, what should be measured, why, and for whom (cui bono?)? This is tied to the general question: what is quality of research, and what is good quality of research? As a consequence, the required change of research culture takes longer than necessary. It also seems useful to talk more to people who do not see the point of Open Science practices. Their reasons against Open Science could provide insights that help remove the last barriers.

The participants further discussed the role of metrics. Open Science, and subsequently its metrics, need a subject- and discipline-specific consensus. A common set of metrics for all scientific disciplines has not proven to be realistic. Metrics that fit certain disciplines and needs can be a good starting point, even if a small one at first, to advance open practices. A lesson learned from (citation-based) bibliometrics (in which we are somehow trapped) is that applying metrics at the institutional and group level, rather than at the level of individual researchers, is seen as a successful way to promote open practices and culture change. This also provides a better way to measure Open Science assets such as collaboration. Besides research outcomes or outputs, procedural criteria (methodology) and further forms of contribution (including negative results) should of course also be evaluated. In the end, however, there is still a lack of indicators to assess the quality of research, a challenge that traditional ‘closed science’ metrics also face.

Finally, another outcome of the discussion is that the financing of Open Science, especially in the transition phase, is still unclear. A model for its sustainable funding and its integration into regular research funding remains an open issue. This is also a matter of equity in access to Open Science as a public common good, as addressed in the UNESCO Recommendation on Open Science.

Vision: What could an Open Science world look like in ten years?

On the second day of the Open Science Retreat, participants were again divided into two breakout groups. They were asked to develop a vision of what an Open Science ecosystem would look like in ten years, once research evaluation has been successful in promoting open practices. The discussions revolved around the following topics, all of which have to be accompanied by a change in attitude.

Types of publications: There will be a tendency towards “micro-publications” (i.e. information is shared as soon as it is available), and machine-based approaches should be applied to evaluate these micro-publications. However, differentiation might be necessary for the various scientific disciplines.

Attributions to authors vs. institutions: Publications should be assigned to institutions rather than individuals, and corresponding mechanisms are needed to clearly attribute and credit the individual contributions. “Negative” results should of course be published as a contribution to science. Furthermore, reviewers should be credited as contributors to the improvement of scientific work.

Shifts in roles of stakeholders: New publishing processes that increase transparency, together with a separate review process, should be applied. One question needs to be settled: do we want funders to be involved in the evaluation and review process (independent of reputation)? There is a danger of assigning too much power to one stakeholder (e.g. funders). Thus, a future Open Science ecosystem has to keep control of its “checks and balances”.

Policies regarding research evaluation: They should be open and FAIR, based on the question: who is supposed to share what, and in which time frame? Therefore, tools need to be in place to evaluate whether these policies meet FAIR and transparency criteria (e.g. currently there is no document version control, no DOI for calls for tenders, etc.).

Finally, each participant was asked to formulate their own vision of a future Open Science world as concisely as possible, in the form and length of a ‘tweet’. A vote yielded the following top aspects of this vision:

- In ten years sharing data and software has become an essential component of good scientific practice. Responsible data management to ensure data quality is part of students’ curriculum from the very beginning.

- An efficient research data management infrastructure of tools and support is available for everybody. Adequate funding is provided to cover efforts for Open Science.

- Data and software publications have become “full citizens” of the publication world, contributing to researchers’ reputation.

- Reproducibility of research results is achieved.

- Publishing negative results will be the norm; researchers will not be blamed for them.

- People and open infrastructure are funded, not projects. They are evaluated based on past performance.

- As research is open, it is fully evaluated by external entities, thereby removing internal politics.

- The term “Open Science” is a thing of the past, since research in science and other fields has opened up such that the open/closed distinction is only necessary when there are good reasons for not sharing, but then at least these reasons are shared in an open and FAIR way.

Save the date for upcoming Open Science Retreats

The discussions were of course much more extensive and complex than can be presented here, and the participants themselves documented a great deal. All tweets and more can be read in anonymised form in the corresponding collaborative pad.

Are you an Open Science advocate who would like to exchange experiences and ideas with a group of 30 like-minded international Open Science enthusiasts from completely different domains? The dates and topics for the next two retreats are already set. Both events will of course take place online.

- 15/16 February 2022: Sustainable and reliable Open Science Infrastructures and Tools

- 14/15 June 2022: Economic actors in the context of Open Science – The role of the private sector in the field of Open Science

About the authors:

Dr Anna Maria Höfler is coordinator for science policy activities. Her key activities are Research Data and Open Science.

Portrait, photographer: Rupert Pessl©

Prof. Dr Isabella Peters is Professor of Web Science. Her key activities are Social Media and Web 2.0 (in particular user-generated content), Science 2.0, Scholarly communication on the Social Web, Altmetrics, DFG project “*metrics”, Knowledge representation, Information Retrieval.

Portrait: ZBW©

Dr Guido Scherp is Head of the “Open-Science-Transfer” department at the ZBW – Leibniz Information Centre for Economics and Coordinator of the Leibniz Research Alliance Open Science.

Portrait: ZBW©, photographer: Sven Wied

Dr Doreen Siegfried is Head of Marketing and Public Relations.

Portrait: ZBW©

Professor Dr Klaus Tochtermann is Director of the ZBW – Leibniz Information Centre for Economics. For many years he has been committed to Open Science on a national and international level. He is a Member of the Board of Directors of the EOSC Association (European Open Science Cloud).

Portrait: ZBW©, photographer: Sven Wied
