Digital Library: Simulation of User Interactions to Optimise Literature Searches

Until now, optimising the user experience in literature searches has required lengthy, expensive tests with real users. The goal of the SINIR project is to significantly reduce the testing and development effort involved in changing library search systems by using simulated user interactions. With this approach, it should be possible to anticipate and evaluate the actual impact of changes to such search systems before they go into production.

An interview with Timo Borst

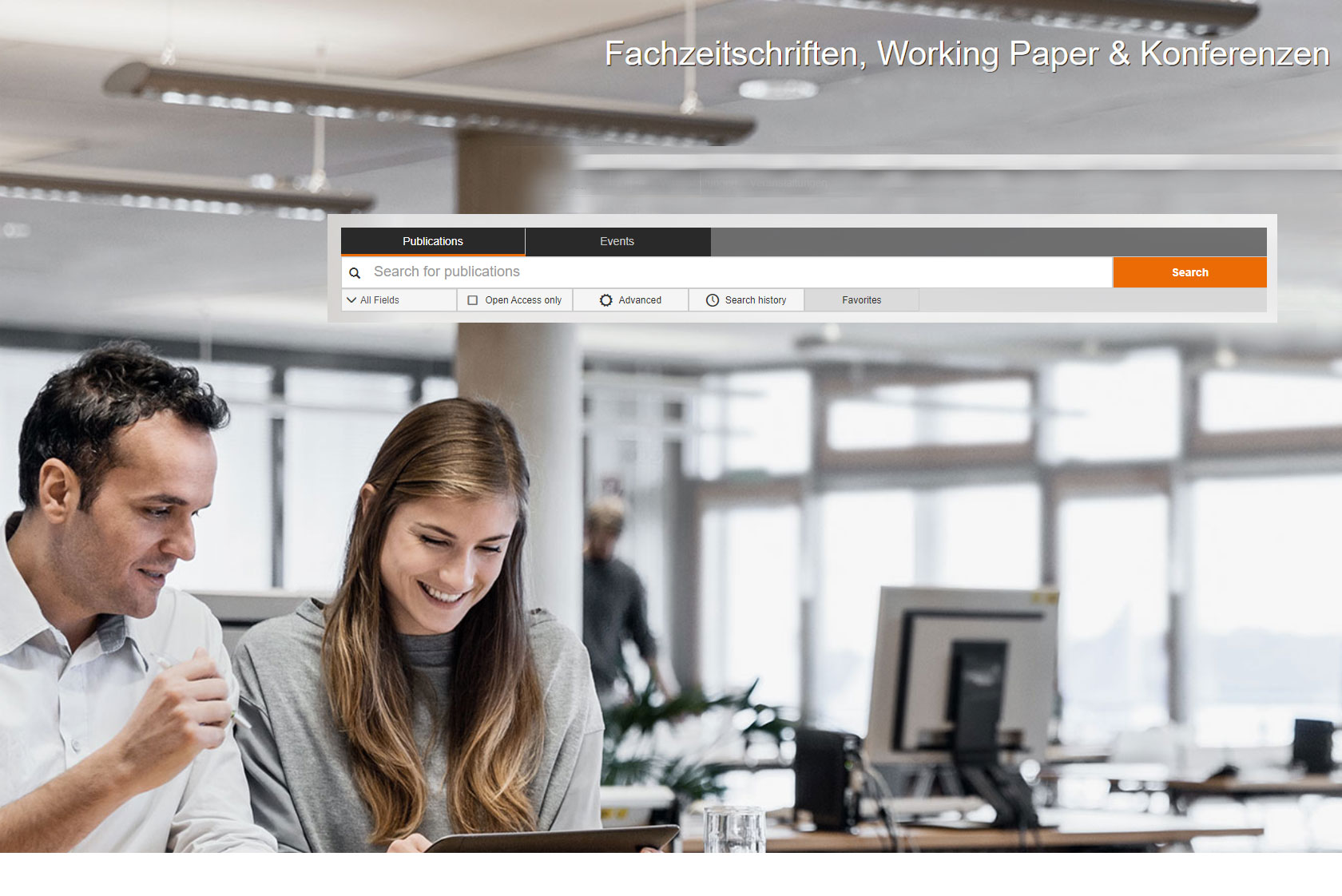

SINIR (‘Simulating Interactive Information Retrieval’), a project funded by the German Research Foundation (DFG), began on 1 October 2019. It is a collaborative project between the ZBW – Leibniz Information Centre for Economics, the universities of Weimar and Passau, and Christin Seifert from the University of Twente as an associated partner. Over the next three years, the project will research and develop processes for simulating user interactions within the context of the specialist economics portal EconBiz. We spoke about the project with Dr Timo Borst, who is head of the Innovative Information Systems and Publishing Technologies (IIPT) department at the ZBW – Leibniz Information Centre for Economics.

What is the goal of SINIR?

By using Google and other similar search engines on a daily basis, users have naturally come to expect that the results shown are some of the most relevant to their search query. They also essentially transfer this expectation to a specialist search portal such as EconBiz, where they can execute or initiate further actions after carrying out a search: They can refine the results or their search using query reformulation, view individual results, download the accompanying publication or otherwise access (protected) documents. We mainly pursue two goals within the context of these usage scenarios:

The first goal is to build a reliable base of data on the activities mentioned above. When designing user interactions that take place via a web interface such as EconBiz’s, we largely rely on industry standards and conventions, and on functionality provided through templates by search portal technologies such as the community project VuFind. However, from an empirical perspective, i.e. based on the systematic collection and evaluation of user data, we do not know exactly whether these interactions actually take place, or whether they meet user expectations in the way we assume they do. For example, users might find a document preview useful, one that shows at least the first page of an article or the table of contents of a work. But until now, we have lacked the framework to clearly formulate, let alone test, hypotheses about optimised interface design in a way that is verifiable from an empirical perspective.

In addition to this methodological aspect, SINIR also pursues a goal relating to content: to model and simulate existing or planned user interactions so that better forecasts can be made of the expected effect on users, weighed against costs and benefits that are partly economic. Sticking with the example above: with SINIR, we want to be able to introduce and test the preview functionality in a simulation environment before we actually introduce it into the production system. This is because changes to the production system often require far-reaching intervention, which could annoy or overwhelm users and might not produce the desired or planned positive effects. If that happens, it is often difficult to undo changes once they have been made. SINIR should therefore help to detect wrong turns in interaction design as early as possible and, ideally, fundamentally shorten innovation cycles when developing a digital library like EconBiz.

What specific improvements is SINIR striving towards?

As already discussed, SINIR should first of all help to shorten innovation cycles when developing a digital library and search application like EconBiz. Such innovations are subject to conditions that make them difficult, if not impossible, from the outset: they intervene in the user experience, and until now the effect of such interventions could only be evaluated using A/B tests, usually only by major search engines. Digital libraries can run A/B tests in their own environment only to a limited extent, because their user base is generally smaller and relatively homogeneous. We therefore hope to use SINIR to demonstrate a specific way in which A/B tests can be supported by simulated user interactions, at least to the extent that only the most promising ‘system candidates’ are considered from the outset for tests with real users and field experiments.

What is the approach?

The first step involves defining a ‘baseline’, i.e. the state of the information system including its data and lists of search results (sorted by relevance or rating), which can be used as a starting point for simulations and evaluations. For specific usage analyses, we use query logs, which include the search query or its reformulation, and event-related interaction logs, which document the user’s interaction with the retrieval system. Typical events of this type relate to a list of search results and consist of user actions such as selecting a facet, selecting a single result or downloading a document. From these interactions we abstract user models, which can then serve as a starting point for simulations and for any optimisations derived from them. A key concern here is the relationship between the original search query, the search result in the form of a sorted list of results and the subsequent user interactions: as the sequence of these interactions can differ considerably in length and variance depending on the sorting order, the relevance model on which this order is based can be of significant importance for evaluating and optimising an information retrieval system (IR system).
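To make the step from interaction logs to a user model more concrete, here is a minimal sketch in Python. It assumes that each session has already been reduced to an ordered list of event names and that a simple first-order transition model is sufficient. The event names and the `estimate_transitions` function are hypothetical illustrations, not the project's actual log schema or method.

```python
from collections import Counter, defaultdict

# Hypothetical sessions; the event names are assumptions, not the EconBiz log format.
sessions = [
    ["query", "select_facet", "view_result", "download"],
    ["query", "view_result", "query", "view_result", "download"],
    ["query", "view_result", "view_result"],
]

def estimate_transitions(sessions):
    """Estimate P(next event | current event) by counting adjacent event pairs."""
    counts = defaultdict(Counter)
    for events in sessions:
        for current, nxt in zip(events, events[1:]):
            counts[current][nxt] += 1
    return {
        current: {nxt: n / sum(following.values()) for nxt, n in following.items()}
        for current, following in counts.items()
    }

transitions = estimate_transitions(sessions)
print(transitions["query"])  # {'select_facet': 0.25, 'view_result': 0.75}
```

A table of this kind is only one possible abstraction. It ignores the ranking of the result list, which the interview identifies as a key influence on the length and variance of interaction sequences.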

We try to use our simulations to predict, not replace, specific interaction behaviour. With this in mind, we give test users tasks in the form of articulated information needs, which they are asked to meet as well as possible by interacting with the previously simulated IR system. An example of such an information need is the question ‘What are the effects of Brexit on the European Economic Area?’. Depending on how this query is communicated to the system, the test user receives a list of search results sorted by relevance as a starting point for further interactions, right up to accessing or downloading a document, i.e. the presumed outcome and end of the user’s interaction. Information needs, the starting point for analysing and evaluating any IR system, cannot be retrieved directly from usage data, so we reconstruct or formulate them in three different ways: firstly, as a collection of downloaded documents that meet the information need; secondly, as a prose description or task; or thirdly, as an initial search query which would then have to be refined further.
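The three ways of representing an information need could be held in a single record. The following is a sketch under assumptions: the class name, field names and the example query string are hypothetical and do not come from the project.

```python
from dataclasses import dataclass, field
from typing import List, Optional

@dataclass
class InformationNeed:
    """One information need in any of the three forms described above."""
    downloaded_documents: List[str] = field(default_factory=list)  # IDs of documents that met the need
    task_description: Optional[str] = None  # prose description or task
    initial_query: Optional[str] = None     # first query, to be refined later

    def available_forms(self) -> List[str]:
        """Return the names of the representations that are filled in."""
        forms = []
        if self.downloaded_documents:
            forms.append("downloaded_documents")
        if self.task_description:
            forms.append("task_description")
        if self.initial_query:
            forms.append("initial_query")
        return forms

brexit = InformationNeed(
    task_description="What are the effects of Brexit on the European Economic Area?",
    initial_query="brexit european economic area",  # assumed query wording
)
print(brexit.available_forms())  # ['task_description', 'initial_query']
```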

How do you simulate user interactions?

Among other things, we generate the user models already mentioned in order to simulate specific interaction behaviour. Such user models comprise interactions or sequences that are typical of an IR system and predetermined by its functionality, such as running a search query, receiving a search result in the form of a list or selecting a result from the list. We understand the simulated search process as a more or less ordered sequence of these interactions, a sequence that does not remain static but can change in light of information already found. For example, the probability that a result is selected again from a list could be reduced if it has already been selected once and other results have been opened since.
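The idea that selection probabilities change during a session can be illustrated with a small simulation. This is a sketch, not the project's simulator: the rank-based starting weights, the `decay` factor and the stopping probability `p_stop` are all assumed values chosen for illustration.

```python
import random

def simulate_session(n_results=5, max_steps=10, decay=0.3, p_stop=0.2, seed=0):
    """Simulate clicks on a ranked list; results already opened become less likely."""
    rng = random.Random(seed)
    # Assumption: higher-ranked results start out more likely to be selected.
    weights = [1.0 / (rank + 1) for rank in range(n_results)]
    clicks = []
    for _ in range(max_steps):
        # After at least one click, the simulated user may end the session.
        if clicks and rng.random() < p_stop:
            break
        choice = rng.choices(range(n_results), weights=weights, k=1)[0]
        clicks.append(choice)
        weights[choice] *= decay  # already seen: lower the chance of re-selection
    return clicks, weights

clicks, weights = simulate_session(seed=1)
print(clicks)
```

Because the weights are updated after every click, the sequence is not drawn from a fixed distribution: each interaction reshapes the probabilities for the next one, which is the non-static behaviour described above.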

What special challenges does SINIR face? How do you want to approach them?

As always when simulating human behaviour, the question naturally arises for us of whether, and to what extent, simulated interactions reflect actual interactions in the sense that they can predict or even replace them. In principle, such simulations offer several benefits: they can supplement time-consuming A/B tests or filter candidates in advance, they avoid having to collect or keep actual user data for longer than necessary, and in some environments they may make it unnecessary to run the tests at all. In the course of the project, we simply have to see whether and how we can simulate user interactions in such a way that they yield predictive models that are empirically valid.

Dr Timo Borst is head of the Innovative Information Systems and Publishing Technologies department at the ZBW – Leibniz Information Centre for Economics. He researches open bibliographic data and systems that serve their creation, processing, standardisation and linking. These include in-house ZBW applications as well as external data hubs such as Wikidata. He can also be found on LinkedIn, ORCID, ResearchGate and Twitter.

Portrait: ZBW©
